Kubernetes has become a household name in the tech world, and for good reason. As an open-source container orchestration tool, it helps businesses deploy, manage, and scale applications with ease. Multi-cloud strategies, where companies use multiple cloud providers, are now a common approach for modern enterprises. They offer flexibility, improved reliability, and the ability to avoid vendor lock-in. However, managing these deployments across different platforms can quickly get complicated. That’s where Kubernetes steps in. By streamlining processes and reducing complexity, it empowers teams to focus on delivering value rather than wrestling with infrastructure challenges.

Understanding Multi-Cloud Deployments

Multi-cloud deployments have become a cornerstone in modern IT strategies. By using services from multiple cloud providers, businesses can tailor their approach to meet unique challenges and goals. But why is this approach so compelling for enterprises, and what hurdles do they need to overcome? Let’s explore.

Defining Multi-Cloud Strategy

A multi-cloud strategy involves utilising services from more than one cloud provider, such as AWS, Microsoft Azure, and Google Cloud. Hybrid cloud describes something different: blending private infrastructure with public cloud. Multi-cloud is about drawing on clouds from different vendors, whether public or private, and the two approaches can overlap. Think of it as having several tools in your toolbox, each chosen for its specific strength.

With this approach, businesses avoid relying solely on one provider for all their needs. It allows organisations to adopt the right tools for specific workloads, whether that’s superior machine learning models on one platform or robust storage capabilities on another. While hybrid cloud prioritises integration across private and public infrastructures, multi-cloud casts a broader net, ensuring optimal flexibility and choice.

Why Enterprises Choose Multi-Cloud

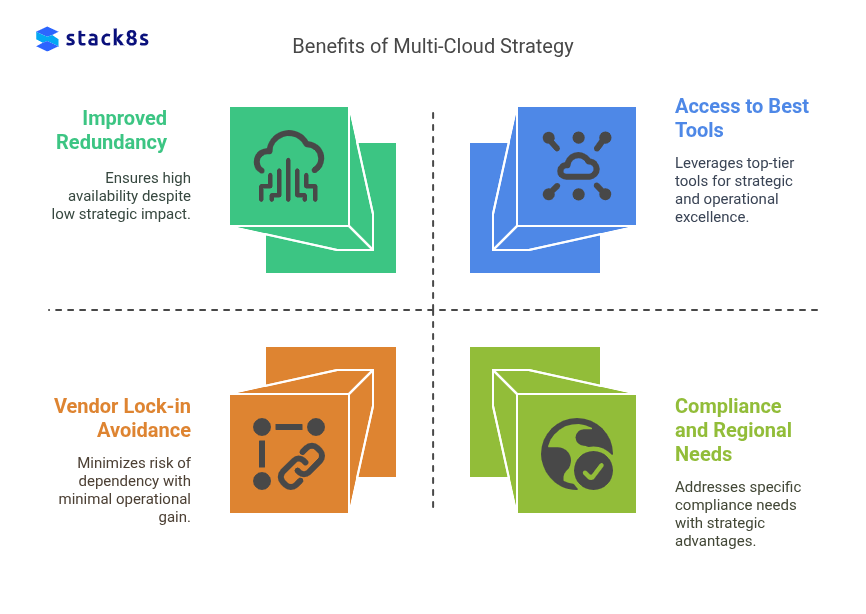

Why not stick with one cloud provider? After all, wouldn’t that simplify operations? For many businesses, the trade-offs of a single-cloud setup outweigh the convenience. Here’s why enterprises are increasingly opting for a multi-cloud approach:

- Avoiding Vendor Lock-in: Relying on one provider can put businesses in a vulnerable position. What if prices increase, service quality declines, or features lag behind competitors? With multi-cloud, companies maintain the freedom to pivot when needed.

- Improved Redundancy: Outages happen—even to the biggest cloud providers. Distributing workloads across multiple platforms ensures systems stay online, even if one provider experiences issues.

- Access to the Best Tools: Each cloud provider has areas where they excel. For instance, AWS might offer great database options, while Google Cloud specialises in AI services. A multi-cloud approach lets companies pick the best tool for the job.

- Compliance and Regional Needs: Different providers offer better support for compliance regulations or data residency requirements, especially across international markets.

The ability to mix and match services gives businesses an edge, fostering innovation and ensuring operations are both resilient and flexible.

Challenges of Multi-Cloud Environments

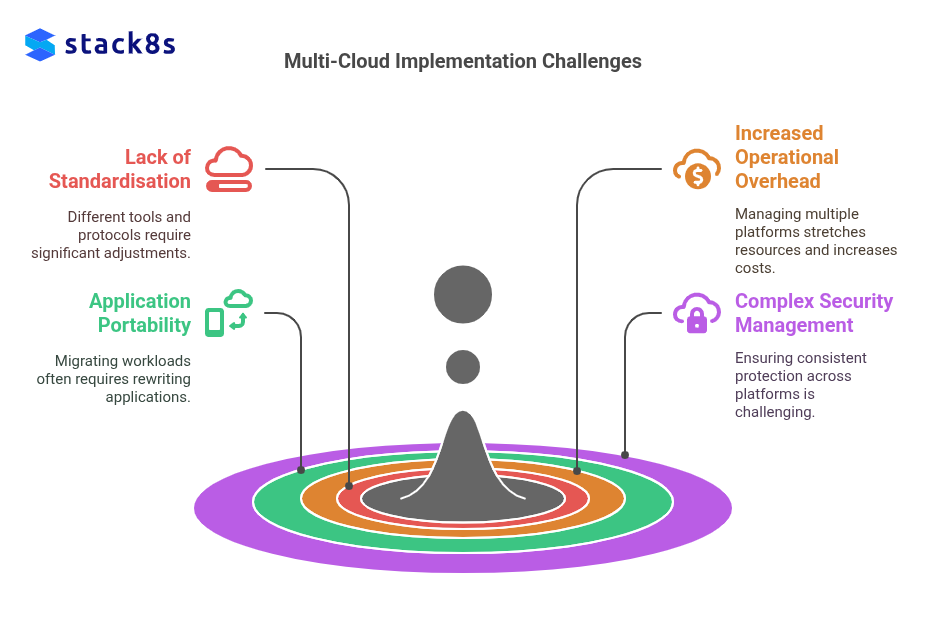

While the benefits are clear, multi-cloud environments come with real challenges. Managing multiple providers isn’t as simple as flipping a switch. Let’s explore the most common obstacles:

- Lack of Standardisation: Each cloud provider has its own set of tools, APIs, and protocols. Application deployment processes that work on one platform may need significant tweaking to function on another.

- Increased Operational Overhead: Juggling multiple platforms means more monitoring, configuration, and management. Teams need specialised skills for each provider, which can stretch resources thin and increase costs.

- Application Portability: Migrating workloads between clouds can be tricky. A lack of compatibility often means re-writing parts of an application to make it work across providers.

- Complex Security Management: Each platform comes with its own security settings, permissions, and monitoring tools, requiring teams to ensure consistent protection across environments.

Despite these complexities, advancements in container orchestration tools like Kubernetes are smoothing out many of these rough edges. They offer a unified way to manage infrastructure and simplify deployments across diverse clouds. Still, understanding these challenges is crucial before diving into a multi-cloud strategy to ensure proper planning.

Multi-cloud deployments are powerful but come with their own set of hurdles. For enterprises, finding the right balance between efficiency, flexibility, and manageability is key. In this context, Kubernetes emerges as a valuable ally, capable of simplifying operations while mitigating the pains of handling multiple clouds.

Kubernetes: The Foundation for Multi-Cloud Deployments

Kubernetes has become a cornerstone for organisations pursuing multi-cloud strategies because it simplifies much of the complexity of managing applications across cloud environments. By providing a consistent layer for deploying and scaling applications, Kubernetes removes a lot of the guesswork from multi-cloud operations. Whether you're juggling AWS, Azure, Google Cloud, or smaller platforms, it helps technology teams stay in control without being bogged down by platform-specific quirks.

How Kubernetes Abstracts Cloud Complexities

Managing workloads directly on multiple cloud platforms often feels like juggling different pieces of a complicated puzzle. Each provider has its own tools, APIs, and processes that don't always play well together. This lack of standardisation can derail teams, slowing progress and adding frustration. Kubernetes solves this problem by acting as a unifying layer.

At its core, Kubernetes creates a uniform environment for deploying and operating workloads, no matter the underlying cloud provider. Think of it as a translator—a single language that works across all cloud platforms. This abstraction enables developers to write, package, and deploy applications in the same way, regardless of where they'll be running.

What’s more, Kubernetes minimises dependencies on any single vendor. Instead of tying your applications to the specifics of AWS or Azure, Kubernetes decouples them from the underlying infrastructure. This ensures you’re not locked into one provider, protecting your flexibility and investment in the long term. You can even mix and match clouds, taking advantage of each one's unique strengths without worrying about compatibility headaches.
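As a minimal sketch of that decoupling, the Python below builds one Deployment manifest and pairs it with several kubectl contexts. The context names (`aws-prod`, `azure-prod`, `gcp-prod`), the image, the app name, and the file name are all hypothetical, and the script only prints the commands it would run rather than executing them.

```python
import json


def deployment_manifest(name, image, replicas):
    """Build an apps/v1 Deployment with nothing provider-specific in it."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical kubectl context names, one per cloud.
CONTEXTS = ["aws-prod", "azure-prod", "gcp-prod"]

manifest = deployment_manifest("web", "registry.example.com/web:1.4.2", 3)
manifest_json = json.dumps(manifest, indent=2)  # kubectl accepts JSON as well as YAML

# The same file is applied to every cluster; only the context changes.
commands = [f"kubectl --context {ctx} apply -f web-deployment.json" for ctx in CONTEXTS]
for cmd in commands:
    print(cmd)
```

The point of the sketch is that the manifest carries no cloud-specific fields, so the only thing that varies between providers is which cluster receives it.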

Streamlining Application Portability with Kubernetes

One of the biggest multi-cloud challenges is moving applications from one provider to another. It shouldn't feel like packing up and moving house every time you switch providers, right? Kubernetes makes this easier by working hand-in-hand with containers.

Containers, such as those built with Docker, provide lightweight, portable environments that package everything an application needs: code, libraries, and dependencies. Kubernetes orchestrates these containers and runs them the same way on any conformant cluster. Essentially, it picks up your application, as-is, and places it wherever you need. Running these workloads on Google Cloud today but considering a switch to AWS tomorrow? As long as the application doesn’t depend on provider-specific services, that migration shouldn’t require rewriting large chunks of your code.

This portability also simplifies the development process. Developers can focus on building applications without fretting over which cloud will host them later. Kubernetes ensures the deployment process is predictable, repeatable, and seamless—even when moving across clouds. This kind of flexibility is what makes multi-cloud strategies not only possible but realistic for growing businesses.

Monitoring and Managing Multi-Cloud Workloads

Keeping track of workloads across multiple clouds is a logistical nightmare without the right tools. Different dashboards, metrics, and methods for handling resources can quickly overwhelm even the sharpest teams. Kubernetes helps here too: every cluster exposes the same API, so the same tooling can manage workloads regardless of where they’re running.

By using Kubernetes, you can allocate resources like CPU, memory, and storage in the same way on every cloud. Need more capacity for a growing app in one region? Within each cluster, Kubernetes handles load balancing, scaling, and rescheduling. Its self-healing capabilities mean workloads are automatically restarted or rescheduled if a container or node fails, minimising downtime. Failover between clusters on different clouds needs additional tooling on top, such as global load balancing.

But resource management is just one piece of the puzzle. Kubernetes also exposes resource metrics and health information through its API. Combined with tools like Prometheus and Grafana, which can collect metrics from every cluster, you can monitor the health, stability, and efficiency of your workloads from a single dashboard. This consolidated view is crucial when you're dealing with the complexities of multiple cloud providers.

In this way, Kubernetes transforms multi-cloud management into a streamlined process. Instead of constantly firefighting or switching between provider-specific tools, teams can rely on Kubernetes as their control centre. It puts everything you need into one place, enabling you to monitor and optimise your applications with confidence.
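To illustrate what a consolidated view might compute, here is a small sketch that rolls per-pod CPU samples up into one utilisation figure per cluster. The cluster names, pod names, and numbers are made up for illustration; in practice the samples would come from whatever metrics pipeline you run, such as Prometheus.

```python
from dataclasses import dataclass


@dataclass
class PodSample:
    cluster: str
    pod: str
    cpu_used_m: int     # CPU in use, in millicores
    cpu_request_m: int  # CPU requested, in millicores


def utilisation_by_cluster(samples):
    """Return used/requested CPU per cluster, rounded to two decimals."""
    totals = {}
    for s in samples:
        used, requested = totals.get(s.cluster, (0, 0))
        totals[s.cluster] = (used + s.cpu_used_m, requested + s.cpu_request_m)
    # Skip clusters with no requested CPU to avoid dividing by zero.
    return {c: round(u / r, 2) for c, (u, r) in totals.items() if r > 0}


# Made-up sample data for two hypothetical clusters.
samples = [
    PodSample("aws-us", "web-1", 150, 500),
    PodSample("aws-us", "web-2", 350, 500),
    PodSample("gcp-asia", "web-1", 450, 500),
]

report = utilisation_by_cluster(samples)
print(report)
```

A figure like this makes it easy to spot a cluster that is running hot while another sits mostly idle.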

Key Kubernetes Features That Enable Multi-Cloud Deployments

Kubernetes is packed with features that simplify multi-cloud deployments, making it a favourite among forward-thinking organisations managing applications across multiple platforms. Its ability to bridge gaps between cloud providers ensures consistency, security, and scalability without unnecessary headaches. Let’s take a closer look at some of the key features that make Kubernetes so effective.

Helm Charts for Simplified Deployment

Deploying an application in a multi-cloud setup can feel overwhelming. How do you ensure consistency across environments? This is where Helm charts come in. Think of them as templates or blueprints for your Kubernetes applications. Instead of manually configuring each deployment, Helm charts handle it for you, streamlining the process.

With Helm, you define a single chart that can be reused across all cloud environments. Need to deploy a web app on both AWS and Google Cloud? No problem. Helm renders the same templates for each cluster, so the resulting Kubernetes resources stay consistent from one platform to the next.

You can also customise these templates as needed. For example, you might tweak storage settings for one cloud provider while keeping everything else consistent. This flexibility allows teams to quickly adapt, without starting from scratch for every cloud environment. It's like having one master recipe but adjusting the seasoning based on local tastes.

By simplifying deployment and reducing human errors, Helm accelerates application rollouts and ensures smooth multi-cloud operations.
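The "master recipe with local seasoning" idea can be sketched as a merge of shared values with a small per-cloud override. The Python below imitates how layered Helm values behave (nested maps merge key by key, everything else is replaced); it is an illustration, not Helm's own code, and the keys and storage class names are assumptions chosen for the example.

```python
import copy


def merge_values(base, override):
    """Merge override into a copy of base.

    Nested dicts are merged recursively; any other value,
    including a list, replaces the base value outright.
    """
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Shared defaults (hypothetical keys for a hypothetical chart).
base_values = {
    "replicaCount": 3,
    "image": {"repository": "registry.example.com/web", "tag": "1.4.2"},
    "persistence": {"size": "20Gi", "storageClass": "standard"},
}

# Per-cloud tweaks: only the storage class differs.
overrides = {
    "aws": {"persistence": {"storageClass": "gp3"}},
    "gcp": {"persistence": {"storageClass": "premium-rwo"}},
}

values_per_cloud = {
    cloud: merge_values(base_values, override)
    for cloud, override in overrides.items()
}
print(values_per_cloud["aws"]["persistence"])
```

With Helm itself, the equivalent is usually a shared values file plus one small file per cloud, passed in order so that later files take precedence, for example `helm install web ./chart -f values.yaml -f values-aws.yaml` (file names hypothetical).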

Service Mesh for Enhanced Communication

Imagine the chaos of multiple applications trying to talk to each other across different cloud providers. Without the right tools, ensuring secure, reliable communication can feel like untangling a box of wires. That’s where service mesh tools, like Istio, come into play, and they integrate seamlessly with Kubernetes.

A service mesh creates a communication layer for distributed microservices. It manages data flow, enforces security policies, and helps with service discovery—whether the services are running on AWS, Azure, or any other platform. Essentially, it’s your application’s traffic control centre.

Take security, for instance. With Istio, you can establish encrypted connections between services across clouds. This keeps sensitive data protected, even when it’s travelling across different networks. Or consider load balancing—service mesh tools dynamically route traffic to healthy instances, even if they’re on different providers.

The result? Your applications can communicate smoothly and securely, no matter where they’re deployed. By removing the guesswork of cross-cloud communication, service meshes help eliminate a major hurdle in multi-cloud setups.
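As one concrete example of the security side, Istio lets you require mutual TLS for a namespace with a `PeerAuthentication` resource. The sketch below generates such a policy; the `payments` namespace is hypothetical, and the `apiVersion` shown may differ depending on your Istio release. Encrypting traffic between clusters also assumes the clusters have been joined into one mesh with a shared root of trust, which this sketch does not cover.

```python
import json


def strict_mtls_policy(namespace):
    """Build an Istio PeerAuthentication that only accepts mutual-TLS traffic."""
    return {
        # Assumption: newer Istio releases may serve this under a different version.
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


policy = strict_mtls_policy("payments")  # hypothetical namespace
print(json.dumps(policy, indent=2))
```

Applying the same policy to every cluster in the mesh keeps the encryption requirement identical regardless of provider.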

Kubernetes Federation for Multi-Cloud Coordination

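When managing multiple Kubernetes clusters across various cloud platforms, keeping everything in sync can snowball into a full-time job. Kubernetes Federation is designed to make this process far less painful by providing a single control plane for coordinating clusters across clouds. One caveat: the upstream Federation project (KubeFed) has since been archived by its maintainers, so check the current state of multi-cluster tooling before adopting it. The model described here still applies to the tools that follow the same approach.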

What does this mean in practice? Imagine your company operates clusters on Azure in Europe, AWS in the US, and Google Cloud in Asia. With Kubernetes Federation, you can manage these clusters as one unified system. Need to deploy an app across regions? Instead of configuring each cluster manually, Federation lets you apply the same configurations globally. It’s like having an orchestra, but with one conductor ensuring every instrument plays in harmony.

Another benefit is disaster recovery. Because the same workloads already run in several clusters, traffic can be shifted to another region if one cluster fails. Federation keeps the clusters in sync; the traffic switch itself usually relies on DNS or a global load balancer. This kind of redundancy is crucial for high availability.

By centralising control, Kubernetes Federation cuts down on complexity, saving time and reducing the chances of errors creeping into your deployment management.
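The core idea, one template propagated to several clusters with optional per-cluster overrides, can be modelled in a few lines. This is a conceptual sketch only: the function, the dotted-path override format, and the cluster names are hypothetical and do not reproduce any federation tool's actual API.

```python
import copy


def propagate(template, placements, overrides):
    """Return one rendered manifest per cluster.

    overrides maps a cluster name to {dotted.path: value} pairs that
    replace fields in that cluster's copy of the template.
    """
    rendered = {}
    for cluster in placements:
        manifest = copy.deepcopy(template)
        for path, value in overrides.get(cluster, {}).items():
            *parents, leaf = path.split(".")
            target = manifest
            for key in parents:
                target = target[key]
            target[leaf] = value
        rendered[cluster] = manifest
    return rendered


template = {
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {"replicas": 3},
}

# Hypothetical cluster names matching the regions in the example above.
rendered = propagate(
    template,
    placements=["azure-eu", "aws-us", "gcp-asia"],
    overrides={"aws-us": {"spec.replicas": 6}},
)
for cluster, manifest in rendered.items():
    print(cluster, manifest["spec"]["replicas"])
```

Keeping the template and the overrides separate is what lets a single change roll out everywhere while still allowing for regional differences.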

Role-Based Access Control (RBAC) for Secure Multi-Cloud Operations

Security across diverse environments is a top concern for any team managing multi-cloud strategies. That’s where Kubernetes’ Role-Based Access Control (RBAC) becomes a key player. It ensures that only the right people, services, or applications have access to specific resources.

RBAC is like a bouncer at the door of your deployment operations—only authorised users or systems are allowed in. For instance, maybe a junior developer needs access to staging environments but shouldn’t touch production workloads. With RBAC, you can create rules that enforce these boundaries across every Kubernetes cluster, regardless of the underlying cloud provider.

In multi-cloud scenarios, RBAC’s consistency is invaluable. Roles and bindings are ordinary Kubernetes resources, so you can define them once in version control and apply the same manifests to every cluster. That avoids the risk of accidentally applying looser permissions on one platform than another, even as your environments scale or change.

Add to this the fact that RBAC works seamlessly with other security frameworks and tools. You can integrate cloud provider identity services, audit access logs, and ensure compliance with regulations—all without overhauling workflows.

RBAC makes securing multi-cloud setups far less daunting. It’s robust, adaptable, and, most importantly, keeps everyone from your team to your workloads out of harm’s way.
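The junior-developer example could look roughly like this: a read-only Role in a staging namespace and a RoleBinding that grants it to one user. The role name, namespace, and user name are hypothetical; the resource kinds and fields follow the `rbac.authorization.k8s.io/v1` API.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def read_only_role(name, namespace):
    """Role that can view pods and deployments but not change them."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                # "" is the core API group (pods); "apps" holds deployments.
                "apiGroups": ["", "apps"],
                "resources": ["pods", "deployments"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def bind_role_to_user(role, user):
    """RoleBinding granting the given Role to one user in the same namespace."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{user}", "namespace": meta["namespace"]},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_GROUP},
    }


role = read_only_role("staging-viewer", "staging")  # hypothetical names
binding = bind_role_to_user(role, "jane")           # hypothetical user
print(json.dumps([role, binding], indent=2))
```

Because the Role exists only in the `staging` namespace, the user gains no access to production workloads unless a separate binding is created there.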

Best Practices for Using Kubernetes in Multi-Cloud Deployments

Kubernetes has proven to be a powerful tool for managing multi-cloud deployments, but using it effectively requires more than just setting it up. By following some best practices, businesses can unlock its full potential to create cloud-agnostic applications, optimise resource usage, and ensure high availability. Let's dive into a few essential strategies.

Building Cloud-Agnostic Applications

Designing applications that run seamlessly across different cloud providers is the cornerstone of a successful multi-cloud approach. Kubernetes makes this possible, but it still requires thoughtful planning.

- Containerisation is Key: Build applications as containerised workloads. Containers package your app with everything it needs to run, from libraries to dependencies, ensuring consistency across all environments. This eliminates the dreaded “it works on my machine” problem.

- Avoid Cloud-Specific Services: Stick with open-source tools or services that work across all platforms. For example, have applications request storage through Kubernetes abstractions such as PersistentVolumeClaims and StorageClasses instead of referencing a platform-specific product like AWS EBS or Google Persistent Disk directly. This minimises rework if you switch providers.

- Abstract Configuration Management: Use tools like Helm to manage your application deployments. With reusable configurations, you can tailor workloads for any cloud without starting from scratch. It's like carrying a universal travel adaptor: one plug that fits whatever socket you find.

- Standardise APIs for Communication: Ensure that communication between microservices is based on standardised APIs. This allows service interactions to remain unchanged, whether they’re running on Azure, AWS, or both.

By prioritising portability in your design choices, you’ll save time, reduce errors, and maintain flexibility as your cloud strategy evolves.
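For storage, one way to keep provider details out of the application is to have it request a PersistentVolumeClaim and leave the choice of backing disk to the cluster. The sketch below builds such a claim; the claim name, size, and the `fast-ssd` class name are assumptions for illustration.

```python
import json


def volume_claim(name, size, storage_class=None):
    """Build a PersistentVolumeClaim.

    When storage_class is None the field is omitted, so the cluster's
    default StorageClass (if one is configured) decides the backing disk.
    """
    spec = {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


# Portable claim: no provider-specific details at all.
portable = volume_claim("orders-data", "20Gi")

# Same claim pinned to a class that an operator has defined in each cluster
# under the same (hypothetical) name.
pinned = volume_claim("orders-data", "20Gi", storage_class="fast-ssd")

print(json.dumps(portable, indent=2))
```

Defining a class with the same name in every cluster, each mapped to that provider's disk type, keeps the application manifests identical across clouds.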

Effective Resource Management

Resource management isn’t just about cost control; it’s about ensuring your applications perform reliably without wasting money. In multi-cloud environments, Kubernetes gives you the tools to optimise resources, but it’s up to you to use them wisely.

- Autoscaling for Cost Efficiency: Kubernetes’ Horizontal Pod Autoscaler adjusts the number of running pods based on observed metrics such as CPU utilisation. Paired with node autoscaling, this means you’re not paying for unused capacity during quiet periods, while still scaling up automatically during traffic spikes.

- Use Resource Requests and Limits: Define requests (the resources the scheduler reserves for a container) and limits (the maximum it may use) for CPU and memory in your deployments. This prevents one workload from hogging resources at the expense of others in a shared infrastructure.

- Monitor and Allocate Across Clouds: Use tools like Prometheus and Grafana to monitor how resources are being consumed across providers. This visibility helps identify underused or underperforming resources, allowing teams to reallocate workloads effectively.

- Spread Workloads Strategically: Take advantage of different pricing models offered by clouds. For example, you might run non-urgent batch jobs on a lower-cost provider while keeping latency-sensitive workloads on a premium service.

Resource optimisation in a multi-cloud deployment is like balancing your household budget. You allocate funds where they’re needed most, ensuring nothing is wasted and everything runs smoothly.
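The autoscaling behaviour above follows a simple proportional rule: desired replicas = ceil(current replicas × current metric ÷ target metric). The sketch below is a simplified model of that rule with a tolerance band and min/max bounds; it deliberately ignores details of the real autoscaler such as stabilisation windows and pods that are not yet ready, and the numbers are illustrative.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler calculation."""
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Traffic spike: 4 pods at 90% CPU against a 60% target.
spike = desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10)

# Quiet period: 4 pods at 20% CPU against the same target.
quiet = desired_replicas(4, 20, 60, min_replicas=2, max_replicas=10)

# Within tolerance: no change.
steady = desired_replicas(4, 63, 60, min_replicas=2, max_replicas=10)

print(spike, quiet, steady)  # 6 2 4
```

Setting sensible minimum and maximum bounds matters as much as the target itself, since they cap both the cost of a spike and the risk of scaling down too far.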

Ensuring High Availability and Disaster Recovery

A key reason to use a multi-cloud strategy is to improve resilience. Kubernetes offers a range of options to ensure uptime and safeguard against unexpected failures, but proper implementation is critical.

- Multi-Cluster Failover: Deploy applications across multiple Kubernetes clusters on different providers. Use tools like Kubernetes Federation or custom failover scripts to redirect traffic to healthy clusters if one goes down. This redundancy ensures your systems stay online, even during regional outages.

- Data Replication Across Clouds: For databases, replicate data across clouds to avoid platform dependencies. Tools like Vitess or CockroachDB integrate with Kubernetes to offer multi-cloud data replication and sharding, reducing the fallout of cloud-specific failures.

- Health Checks and Auto-Healing: Kubernetes’ built-in liveness and readiness probes continuously check the health of applications. A container that fails its liveness probe is restarted automatically, and a pod that fails its readiness probe stops receiving traffic until it recovers, reducing downtime without manual intervention.

- Disaster Recovery Playbooks: Document failover and recovery procedures. Even with Kubernetes simplifying much of this process, human oversight is still needed for complex scenarios. A clear, tested plan can make the difference between a brief hiccup and prolonged downtime.

Think of high availability and disaster recovery planning like preparing for stormy weather. You hope you won’t need to use your safety measures, but when the storm hits, you’ll be glad you planned ahead.
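A custom failover script of the kind mentioned above might, at its core, track consecutive failed health checks per cluster and route to the first cluster in a priority list that is still considered healthy. The following is a minimal sketch under that assumption; the cluster names and the threshold are hypothetical, and a real script would also need to perform the health checks and update DNS or a load balancer.

```python
FAILURE_THRESHOLD = 3  # consecutive failed checks before a cluster counts as down


def record_check(failures, cluster, ok):
    """Reset the failure counter on success, increment it on failure."""
    failures[cluster] = 0 if ok else failures.get(cluster, 0) + 1


def pick_active(priority, failures):
    """Return the highest-priority cluster below the failure threshold, or None."""
    for cluster in priority:
        if failures.get(cluster, 0) < FAILURE_THRESHOLD:
            return cluster
    return None


priority = ["aws-us", "gcp-eu"]  # hypothetical clusters, preferred first
failures = {}

# Simulate three failed checks on the primary and one success on the backup.
for ok in (False, False, False):
    record_check(failures, "aws-us", ok)
record_check(failures, "gcp-eu", True)
active = pick_active(priority, failures)
print(active)  # gcp-eu

# Once the primary passes a check again, traffic returns to it.
record_check(failures, "aws-us", True)
recovered = pick_active(priority, failures)
print(recovered)  # aws-us
```

Requiring several consecutive failures before switching avoids failing over on a single dropped health check.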

Kubernetes provides the tools to make multi-cloud deployments easier, but real success lies in how you use them. Whether it’s designing resilient applications, managing resources efficiently, or ensuring uptime, each best practice helps you harness the full potential of Kubernetes in a multi-cloud environment.

Case Studies: Real-World Use of Kubernetes in Multi-Cloud

Many organisations have turned to Kubernetes to manage their multi-cloud operations effectively, reaping the benefits of flexibility, scalability, and security. While theory helps us grasp "how it works," nothing beats seeing Kubernetes in action. Let’s examine real-world examples to understand its impact and discover valuable lessons for multi-cloud success.

Case Study: E-Commerce Platform Scaling Across Regions

An international e-commerce company, let’s call them "ShopSmart," faced a big challenge managing peak shopping seasons like Black Friday and global shipping promotions. Their customers, spread across Europe, Asia, and the Americas, required fast, localised experiences, but relying on a single cloud provider introduced latency issues and risked outages affecting their entire platform.

By adopting Kubernetes, ShopSmart balanced their application delivery across AWS, Google Cloud, and Azure. Here’s how they did it:

- Fast Regional Deployment: Kubernetes allowed ShopSmart to spin up application instances closer to their customers. For example, customers in Europe were served via an Azure data centre, reducing page load times noticeably.

- Burst Scalability: During peak hour flash sales, Kubernetes’ autoscaling feature ensured resources were dynamically deployed to handle the traffic surge. No more site crashes or frustrated customers.

- Simplified Updates: ShopSmart used Helm charts for deployment consistency. This made rolling out features and bug fixes seamless across all regions—no downtime, no inconsistencies.

The result? ShopSmart managed to increase customer satisfaction by 35%, boosted revenue during peak shopping periods, and halved their downtime compared to previous years. They proved that Kubernetes is a powerful enabler of reliable, scalable multi-cloud e-commerce operations without tying the business to a single provider.

Case Study: Financial Institution Securing Critical Workloads

Financial service companies operate under strict compliance rules, often juggling high-stakes workloads while adhering to ever-changing security standards. A European bank—let’s call them "FinBank"—needed to safeguard customer data while deploying across multiple cloud providers to meet regional requirements for data sovereignty.

Here’s how Kubernetes transformed FinBank’s infrastructure:

- Compliance with Data Protection Laws: By leveraging Kubernetes clusters across AWS (US), Google Cloud (EU), and a private cloud for their Asia operations, FinBank ensured sensitive data never left its legal jurisdiction. Kubernetes' configuration flexibility allowed them to enforce these boundaries with ease.

- Advanced Security Through Service Mesh: FinBank adopted Istio as their service mesh to manage communication between microservices. This encrypted traffic across clusters, improving security while enabling smooth data exchange between clouds.

- High Availability: By distributing workloads across multiple clouds, FinBank made its critical operations resilient against regional provider outages. For example, if AWS faced downtime, traffic was redirected to healthy clusters in Google Cloud.

By embracing Kubernetes, FinBank achieved 99.999% uptime and met compliance expectations in every market they served. What’s more, they reduced operational overhead by centralising workload management, allowing their IT teams to focus less on firefighting and more on innovation.

Lessons Learned from Multi-Cloud Implementations

While the benefits of Kubernetes for multi-cloud strategies are clear, there are essential lessons we can draw from these examples. Here are key takeaways:

- Plan for Portability: Both ShopSmart and FinBank reaped success because they didn’t lock their workloads into any single cloud-specific services. Stick to open technologies and Kubernetes-native features to ensure flexibility.

- Distribute Strategically: Multi-cloud doesn’t just mean using multiple clouds; it’s about making thoughtful choices. Place workloads closest to your users for better performance, or across regions for regulatory compliance and redundancy.

- Use Service Meshes for Security: Managing multiple clouds introduces potential points of failure within microservice communication. Tools like Istio can simplify this while ensuring encrypted, efficient traffic flows.

- Keep Operations Centralised: Juggling multiple provider-specific dashboards can quickly become unmanageable. Because every Kubernetes cluster exposes the same API, one set of tooling can cover cluster management, resource allocation, and monitoring, saving time and reducing errors.

- Test Disaster Recovery Plans: ShopSmart and FinBank both benefited from strategic failover mechanisms. Build redundancy into your setup and regularly test failover processes to ensure vulnerabilities don’t catch you off-guard.

- Adopt Autoscaling Smartly: Utilise Kubernetes’ autoscaling features to handle traffic spikes without overcommitting resources. This not only keeps operational costs predictable but also ensures smooth user experiences during high-demand periods.

Real-world implementations showcase the immense potential of Kubernetes in simplifying and enhancing multi-cloud deployments. Whether it’s scaling globally, securing sensitive workloads, or maintaining compliance, the versatility and reliability of Kubernetes make it a vital tool for modern enterprises.

Conclusion

Kubernetes has proven itself as a powerful ally for managing multi-cloud deployments. It simplifies the complexity of working across multiple providers, offering solutions for portability, resource management, and consistent operations. By creating a unified layer, it frees teams from vendor lock-in and streamlines infrastructure management.

For businesses navigating the challenges of multi-cloud strategies, Kubernetes delivers both flexibility and control. Its ability to standardise workloads, enhance scalability, and ensure high availability makes it an essential tool for modern enterprises.

Now is the time to explore how Kubernetes can transform your cloud approach. Could it be the key to unlocking smoother, more efficient operations? Think about your current challenges and how Kubernetes might simplify them.
